Secure your Terraform deployment on AWS with Gitlab-CI and Vault (pipeline side)

We saw in previous posts how to use HashiCorp Vault to centralize static and dynamic secrets, or for Encryption as a Service. In this article we will go further and see how to secure your Terraform deployment on AWS through Gitlab-CI using Vault. This article focuses on the use of secrets at the CI level.

Prerequisites

Note: at the time of writing, Vault is at version 1.6.3.

Another clarification: we rely on the free version of gitlab.com and on Gitlab Community Edition (CE).

To follow this article, you will need:

- Vault: basic understanding such as authentication and types of secrets.

- AWS: IAM (roles, assume role, etc.) and EC2 (metadata, instance profiles, etc.) are covered but do not require an advanced level. Some basic AWS knowledge is nevertheless preferable.

- Gitlab-CI: the fundamentals on the CI part of Gitlab (gitlab-ci.yml, pipeline, etc).

- Terraform: the fundamentals will be sufficient.

Finally, this post follows on from the post How to reduce code dependency with Vault Agent.

The challenge of our CI

CI / CD is widely used in Infrastructure as Code (IaC) and cloud platform application deployments. These platforms offer deployment flexibility through their APIs.

However, how do we give our CI access to these environments in a secure way?

In this post we will use Gitlab-CI for our CI and Terraform for the IaC, in order to stay environment agnostic.

AWS is taken as an example, but the same logic applies to any other cloud provider.

A CI external to AWS, a factor of complexity

AWS has good integration between its services, especially when we stay in its ecosystem.

On the other hand, it is more complicated to use a CI external to AWS, Gitlab-CI in our case, which needs to use AWS to deploy / configure services.

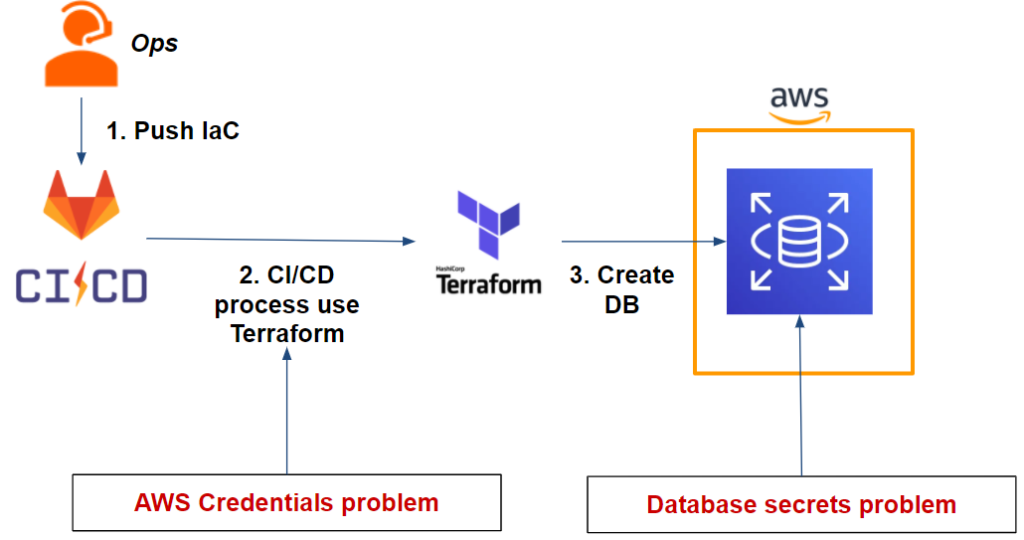

Take for example the following scenario:

We have an app on an EC2 instance running a web server that uses a MySQL database on RDS. We want to deploy this infrastructure as IaC with Terraform using Gitlab-CI.

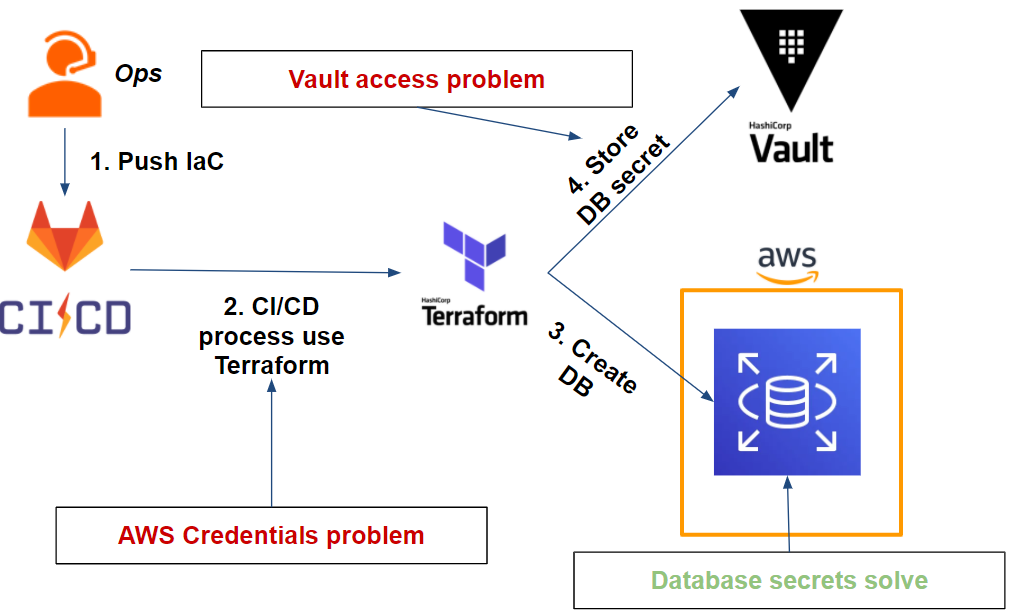

Which gives us the following workflow:

As we can see from our workflow, we have two issues:

- Our Gitlab-CI pipeline: Terraform needs AWS credentials to be able to deploy the RDS database on AWS.

- The database: We need to securely store the admin user credentials that will be generated after the database is deployed.

Trying to solve the challenge by thinking AWS

Let’s focus on our first issue regarding our Gitlab-CI pipeline and our AWS credentials. How can we solve this problem?

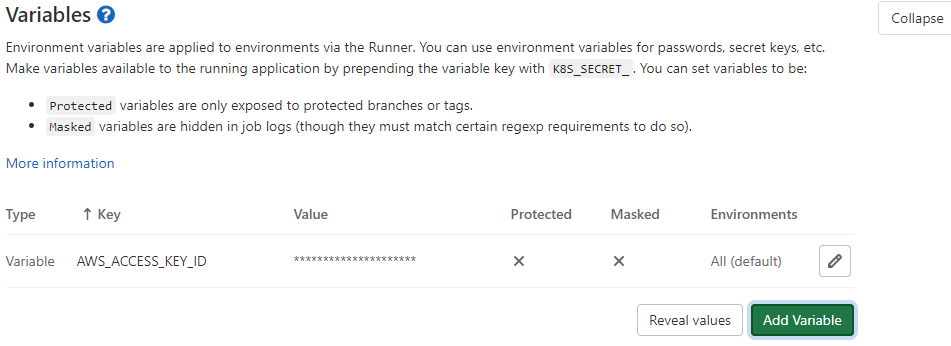

First attempt: the IAM user and environment variables

The easiest and fastest way to solve this problem is to create an IAM user and generate an ACCESS KEY / SECRET KEY pair that we store in the CI / CD variables of our project.

In this way, our Terraform will be able to deploy the application infrastructure on AWS and in particular our RDS. This is possible because Terraform is able to retrieve AWS credentials via environment variables.
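As a minimal sketch of this first attempt, the provider block below contains no credentials at all. It assumes that the CI / CD variables are named AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, which are the names the AWS provider reads from the environment, and that a region variable is declared elsewhere.

```hcl
# No credentials in the code: the AWS provider picks up AWS_ACCESS_KEY_ID and
# AWS_SECRET_ACCESS_KEY from the environment of the CI job.
provider "aws" {
  region = var.region # assumed to be declared in variables.tf
}
```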

This method is indeed the easiest and fastest way to solve the problem mentioned above, but it raises several questions:

- Credentials are static, so you have to set up a rotation mechanism.

- As a corollary, the rotation mechanism itself needs access to both Gitlab-CI and AWS, which raises further questions around access and credentials.

- It is difficult to set up separate credentials for each environment and to isolate them by Git branch (ex: master, dev, features / *).

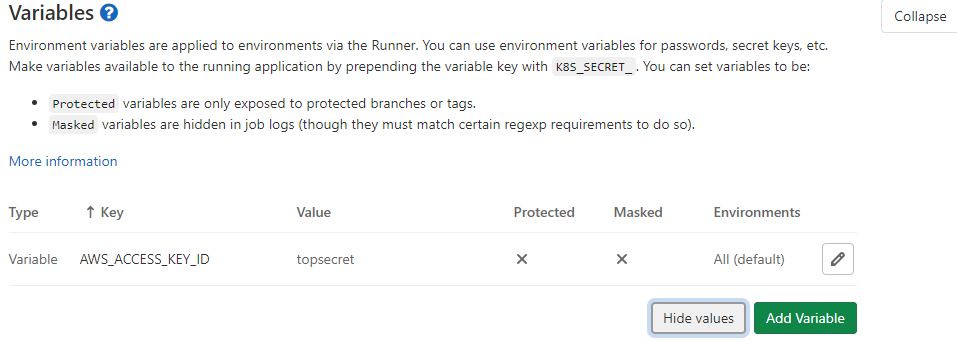

- Anyone with at least the Maintainer or Owner role on a project can see and modify the CI / CD variables. Thus, some users who have strong rights on Gitlab, but who have no legitimate reason to obtain these application or CI secrets, will be able to retrieve or even modify them.

- Going further, a user who can retrieve a secret that is not tied to its source (eg filtering on the source IP, etc.) will be able to use it outside the CI. It then becomes impossible to identify the actual user of this secret, or even whether its use is legitimate.

This situation can quickly cause us to lose control over the access to and use of our secrets.

Second attempt: use of an IAM role and / or AWS instance profile

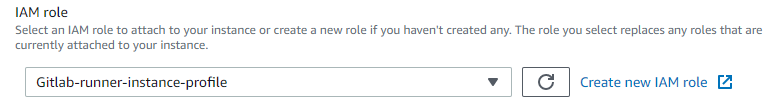

A second option is possible if your Gitlab Runner is on AWS.

Going back to our first attempt, we can delete our IAM user as well as its credentials from the Gitlab CI / CD variables and create an IAM role instead.

The goal is for our Gitlab Runner to assume the IAM role in question in order to benefit from temporary credentials.

If our Gitlab Runner is on an EC2 instance, we just attach an instance profile to it.

If it runs on AWS ECS (Elastic Container Service), we will have to assign an IAM role to our container.

It is also possible to do the same with EKS (Elastic Kubernetes Service).
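For the EC2 case, a rough Terraform sketch of the instance profile could look like the following. All resource names, the instance type and the runner_ami_id variable are hypothetical and only serve the illustration; the permissions of the role itself are left out.

```hcl
# Hypothetical input: the AMI used for the Gitlab Runner instance.
variable "runner_ami_id" {
  type = string
}

# IAM role that the EC2 service may assume on behalf of the instance.
resource "aws_iam_role" "runner" {
  name = "gitlab-runner"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "ec2.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

# The instance profile is what hands the role over to the instance.
resource "aws_iam_instance_profile" "runner" {
  name = "gitlab-runner"
  role = aws_iam_role.runner.name
}

resource "aws_instance" "runner" {
  ami                  = var.runner_ami_id
  instance_type        = "t3.small"
  iam_instance_profile = aws_iam_instance_profile.runner.name
}
```

Every job executed by this runner obtains the same temporary credentials through the instance metadata, whatever project or branch it belongs to.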

This resolves the issues raised in the previous attempt. However, new ones appear:

- Credentials apply at the Gitlab Runner level. This means that all Gitlab-CI projects that have access to this container / Gitlab Runner will benefit from the same IAM privileges. However, we seek to have least privilege for each project.

- Even if we dedicate one Gitlab Runner to each project, it is difficult to isolate the privileges of the Gitlab Runner for each environment or per Git branch (ex: master, dev, features / *).

- This solution is only usable if we are able to deploy our Gitlab Runner on AWS (which is not possible for everyone).

As we can see after this second test, the real challenge is not to give AWS access to our Gitlab Runner or our project but rather to our pipeline / Gitlab-CI for a specific environment with a mechanism of least privilege (especially from a cloud agnostic perspective).

In addition, we have not yet addressed the issue of database secrets.

Vault, the solution to our challenge

As we have seen, it is difficult to authenticate a pipeline, or even a single Gitlab-CI job, so that it can access its secrets securely and with least privilege. HashiCorp Vault allows us to meet this need in a unified, cloud-agnostic fashion.

Let’s take a look at our workflow again, this time adding Vault:

First, Vault allows us to store our database secrets and takes care of their rotation. We will discuss this in more detail later in the article.

Secondly, Vault is able to generate AWS credentials dynamically. However, this raises a new issue, close to our previous question: how can we allow a Gitlab-CI job to use and store secrets in Vault?

In order to solve this problem with Vault, we will address 3 points that we will resolve as we go along:

- Vault must generate AWS secrets dynamically: through the AWS secret engine, we will see how Vault can generate least-privilege credentials on several different target AWS accounts.

- Our pipeline (Gitlab-CI) must, on a specific branch, authenticate with Vault: through the JWT type authentication method, it is possible to authenticate our pipeline on a specific branch with Vault.

- Our pipeline must be able to use and store secrets in Vault: Our pipeline will, once authenticated to Vault, retrieve its AWS secrets and store the database secrets.

To do this, we will use Terraform to configure our Vault through the Vault provider.

All the code on which this article is based for the demonstration can be found on this GitHub repository.

Generating dynamic secrets with Vault

Our first objective is to ensure that our Vault is able to generate dynamic AWS secrets with least privilege.

Vault uses the AWS secret engine, which is able to generate 3 types of AWS credentials:

- IAM user: Vault creates an IAM user, generates its programmatic access (access key and secret key) and returns it.

- Assumed role: Vault assumes an IAM role and returns the session credentials (access key, secret key and session token).

- Federation token: the credentials returned have the same form as for an assumed role, but this time for a federated user.

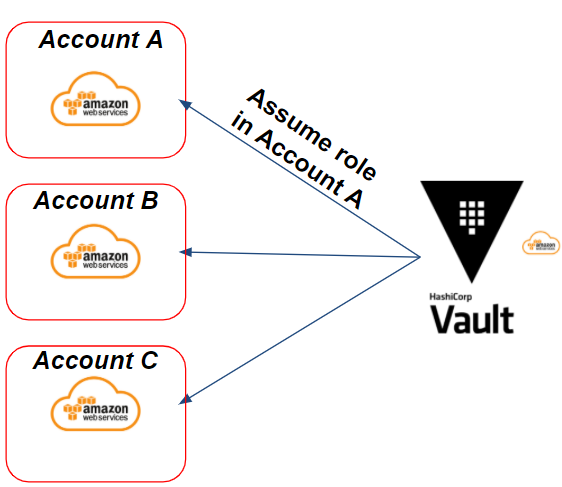

Among these 3 methods, we will focus on the assumed role, which is particularly useful in our multi-account AWS use case.

Consider the following example: we have several target AWS accounts on which Vault should be able to generate AWS credentials by assuming an IAM role. This gives the following scenario:

In our example, we need to generate credentials on the target AWS account A. Vault will assume an IAM role in account A in order to generate dynamic AWS credentials.

Preparing IAM Roles on the AWS Side

To do this, Vault must have AWS credentials and permissions to be able to perform its actions on AWS.

Regarding credentials, we have 2 scenarios:

- If Vault runs inside AWS, on an EC2 instance or on ECS: we can use an instance profile (or a task role) in order to use temporary AWS credentials.

- If Vault runs outside of AWS: we will need to generate an Access Key and Secret Key via an IAM user and rotate these credentials regularly.

Regarding permissions, HashiCorp takes the following policy as an example:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "iam:AttachUserPolicy",
            "iam:CreateAccessKey",
            "iam:CreateUser",
            "iam:DeleteAccessKey",
            "iam:DeleteUser",
            "iam:DeleteUserPolicy",
            "iam:DetachUserPolicy",
            "iam:ListAccessKeys",
            "iam:ListAttachedUserPolicies",
            "iam:ListGroupsForUser",
            "iam:ListUserPolicies",
            "iam:PutUserPolicy",
            "iam:AddUserToGroup",
            "iam:RemoveUserFromGroup"
          ],
          "Resource": ["arn:aws:iam::ACCOUNT-ID-WITHOUT-HYPHENS:user/vault-*"]
        }
      ]
    }

This example is useful if we want Vault to generate IAM users. However, this type of policy grants far more rights than we need in order to assume a role.

The following policy is sufficient to meet our need:

    {
      "Version": "2012-10-17",
      "Statement": {
        "Effect": "Allow",
        "Action": "sts:AssumeRole",
        "Resource": "*"
      }
    }

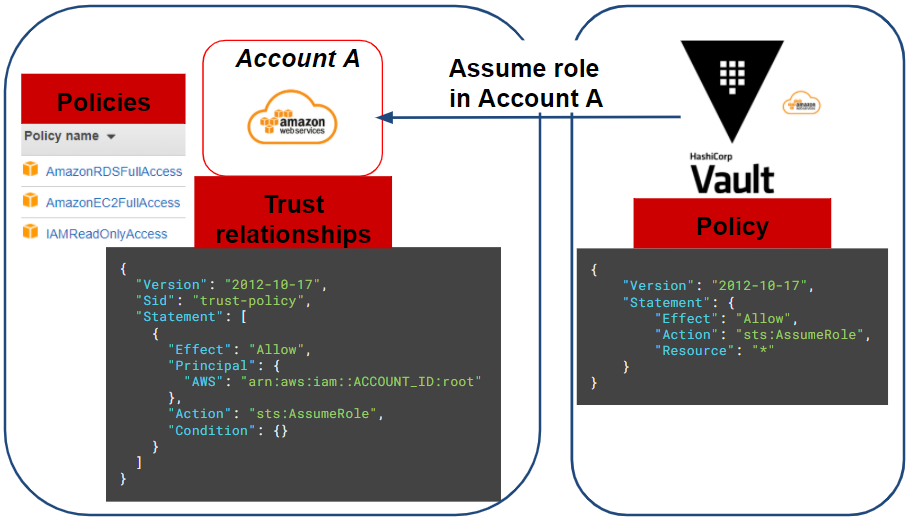

Once Vault has its programmatic access to the AWS source account and the necessary permissions, all we have to do is create the IAM role (or IAM roles if we have multiple target AWS accounts) that Vault should assume.

For our scenario, we will need to deploy an EC2 instance and an RDS type database. We will use the following policies (AWS managed) on the IAM role on our target AWS account (account A):

- AmazonRDSFullAccess

- AmazonEC2FullAccess

- IAMReadOnlyAccess

These permissions will be restricted further, through Vault, for our application.

Finally, the IAM role of the target account must have a trust relationship that authorizes Vault (through the source AWS account, ACCOUNT_ID below) to assume it:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::ACCOUNT_ID:root"
          },
          "Action": "sts:AssumeRole",
          "Condition": {}
        }
      ]
    }
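The target role, its trust relationship and the three managed policies listed above could be declared with Terraform roughly as follows. This is only a sketch: the role name, the vault_source_account_id variable and the output are assumptions made for the illustration, not the code of the demonstration repository.

```hcl
# Hypothetical input: ID of the source AWS account that holds Vault's credentials.
variable "vault_source_account_id" {
  type = string
}

# Role in the target account (account A) that Vault will assume.
resource "aws_iam_role" "vault_target" {
  name = "vault-pipeline"

  # Trust relationship: the source account is allowed to assume this role.
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { AWS = "arn:aws:iam::${var.vault_source_account_id}:root" }
      Action    = "sts:AssumeRole"
    }]
  })
}

# Attach the AWS managed policies mentioned in the article.
resource "aws_iam_role_policy_attachment" "vault_target" {
  for_each = toset([
    "AmazonRDSFullAccess",
    "AmazonEC2FullAccess",
    "IAMReadOnlyAccess",
  ])

  role       = aws_iam_role.vault_target.name
  policy_arn = "arn:aws:iam::aws:policy/${each.value}"
}

# ARN to hand over to the Vault configuration.
output "application_aws_assume_role" {
  value = aws_iam_role.vault_target.arn
}
```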

To summarize all of our actions, the following diagram represents our current situation:

Configuring the Vault role

Now that we have configured the AWS part, all that remains is to configure our Vault via Terraform:

    resource "vault_aws_secret_backend" "aws" {
      description = "AWS secret engine for Gitlab-CI pipeline"
      path        = "${var.project_name}-aws"
      region      = var.region
    }

    resource "vault_aws_secret_backend_role" "pipeline" {
      backend         = vault_aws_secret_backend.aws.path
      name            = "${var.project_name}-pipeline"
      credential_type = "assumed_role"
      role_arns       = [var.application_aws_assume_role]

      policy_document = <<EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "ec2:*",
            "rds:*"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "iam:GetUser"
          ],
          "Resource": "arn:aws:iam::*:user/$${aws:username}"
        }
      ]
    }
    EOF
    }

Here we create our AWS secret engine and a Vault role that will assume the IAM role created beforehand on the target AWS account.

Note that the policy document of the Vault role lets us restrict the rights of our AWS session (credentials) through Vault. Here we grant full access to EC2 and RDS, which can be reduced as needed.
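For the multi-account scenario described earlier, one possible approach (a sketch, not the configuration of the demonstration repository) is to declare one Vault role per target account. The target_role_arns variable and the naming scheme are hypothetical.

```hcl
# Hypothetical input: target account name => ARN of the IAM role Vault assumes there.
variable "target_role_arns" {
  type = map(string)
}

# One Vault role per target AWS account, all served by the same secret engine.
resource "vault_aws_secret_backend_role" "per_account" {
  for_each = var.target_role_arns

  backend         = vault_aws_secret_backend.aws.path
  name            = "${var.project_name}-${each.key}"
  credential_type = "assumed_role"
  role_arns       = [each.value]
}
```

With such a layout, credentials for a given account would be requested on the sts path of the role named after the project and that account, and each role can be covered by its own Vault policy.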

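Finally, to test our credentials, we can run the following Vault command, which should return our AWS session credentials. The mount and role names assume that project_name is web, and the TTL is set to 15 minutes because AWS STS does not issue shorter sessions:

    # Vault admin privilege (only for test)
    $ vault write web-aws/sts/web-pipeline ttl=15m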

Configure authentication for our Gitlab-CI pipeline with Vault

How to authenticate a Gitlab-CI job?

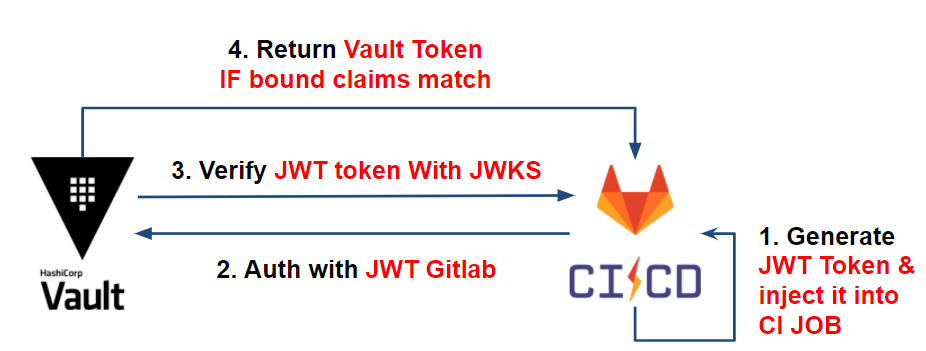

Each Gitlab job has a JSON Web Token (JWT), accessible via the environment variable CI_JOB_JWT.

This JWT contains information (claims) about our Gitlab-CI job (project ID, issuer, branch, etc.) on which Vault can rely to identify our pipeline. The token is signed with the RS256 algorithm, using a private key managed by the issuer: Gitlab.

How does it work with Vault?

To verify the authenticity of the JWT, Vault relies on the JSON Web Key Set (JWKS) of the issuer, which contains the public keys matching the private keys used to sign the JWTs.

Once the authenticity of the token has been proven, Vault compares the claims in the JWT with the expected values (the bound claims, which we configure below), such as the project ID, the issuer, the targeted branch, etc.

If the token information matches Vault’s expectations, Vault delivers a Vault token with the correct policy to the CI, thus allowing it to retrieve its secrets.

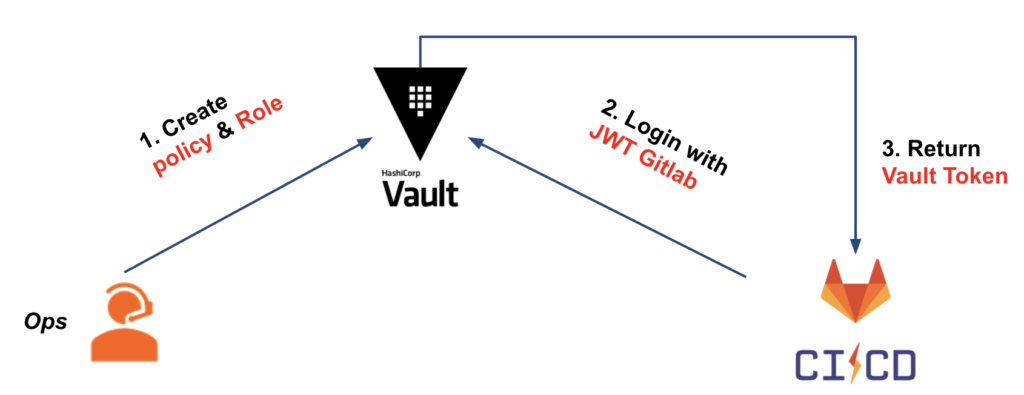

Which gives us the following workflow:

For more information, you can consult the following articles:

- External management of CI secrets

- Authenticate your CI and read its secrets with HashiCorp Vault via Gitlab-CI

Practice with Vault

We have seen the JWT authentication between Vault and Gitlab-CI. Now, let’s take a look at the configuration on the Vault side.

For our use case, we have the following diagram:

To be able to configure our Vault, we will create:

- The JWT authentication method

- A Vault role based on JWT authentication, on which we verify that the claims of the token meet our criteria (bound claims)

- The Vault policy attached to the Vault token delivered to our CI, which grants the rights needed to retrieve the secrets (AWS secrets in particular)

Regarding our first two points, we have the following Terraform configuration:

    resource "vault_jwt_auth_backend" "gitlab" {
      description  = "JWT auth backend for Gitlab-CI pipeline"
      path         = "jwt"
      jwks_url     = "https://${var.gitlab_domain}/-/jwks"
      bound_issuer = var.gitlab_domain
      default_role = "default"
    }

    resource "vault_jwt_auth_backend_role" "pipeline" {
      backend   = vault_jwt_auth_backend.gitlab.path
      role_type = "jwt"
      role_name = "${var.project_name}-pipeline"

      token_policies = ["default", vault_policy.pipeline.name]

      bound_claims = {
        project_id = var.gitlab_project_id
        ref        = var.gitlab_project_branch
        ref_type   = "branch"
      }

      user_claim             = "user_email"
      token_explicit_max_ttl = var.jwt_token_max_ttl
    }

The configuration of our JWT auth backend is fairly standard and only requires knowing the domain name of our Gitlab (eg: gitlab.com).

On the JWT auth backend role side, the bound_claims are the criteria to be met to authorize the authentication of our CI, such as:

- project_id: the Gitlab project ID. Probably the most decisive element, since it restricts authentication to our project only.

- ref: in our case, the branch on which the CI is running. Here we will take master.

- ref_type: the type of the reference. In our case, our references are Git branches.
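Because the branch is part of the bound claims, the isolation per Git branch that was missing in our earlier attempts can be obtained by declaring one Vault role per branch. The sketch below adds a second role for a dev branch; the role, the policy and the AWS role path it points to are hypothetical names chosen for the illustration.

```hcl
# Hypothetical policy reserved for jobs running on the dev branch.
resource "vault_policy" "pipeline_dev" {
  name = "${var.project_name}-pipeline-dev"

  policy = <<EOT
path "${var.project_name}-aws/sts/${var.project_name}-pipeline-dev" {
  capabilities = ["read", "update"]
}
EOT
}

# Same project, but only jobs running on the dev branch can log in with this role.
resource "vault_jwt_auth_backend_role" "pipeline_dev" {
  backend   = vault_jwt_auth_backend.gitlab.path
  role_type = "jwt"
  role_name = "${var.project_name}-pipeline-dev"

  token_policies = ["default", vault_policy.pipeline_dev.name]

  bound_claims = {
    project_id = var.gitlab_project_id
    ref        = "dev"
    ref_type   = "branch"
  }

  user_claim             = "user_email"
  token_explicit_max_ttl = var.jwt_token_max_ttl
}
```

A job running on master that tries to log in with this role would be rejected, since its ref claim does not match.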

Finally, concerning the policy assigned to our Vault role, we have 4 paths allowing our pipeline to:

- aws/sts/pipeline: retrieve its AWS secrets.

- db/*: store database secrets for our project.

- auth/aws/*: allow the project to authenticate to Vault through an AWS authentication method. We will see this part at the application level.

- auth/token/create: create child Vault tokens. Indeed, Terraform, through the Vault provider, generates a child token with a short TTL. To allow this, the path needs the “update” capability. This behavior is specific to the Terraform Vault provider; you will find additional information on the subject in its documentation.
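The four paths above could translate into a policy such as the following sketch. The paths are taken as written in the list (in the demonstration they would carry the project prefix, eg web-aws), and the capabilities other than update on auth/token/create are assumptions to be tightened to your needs.

```hcl
resource "vault_policy" "pipeline" {
  name = "${var.project_name}-pipeline"

  policy = <<EOT
# Retrieve the AWS session credentials
path "aws/sts/pipeline" {
  capabilities = ["read", "update"]
}

# Store and manage the database secrets of the project
path "db/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}

# Configure the AWS auth method used later by the application
path "auth/aws/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}

# Let the Terraform Vault provider create its short-lived child token
path "auth/token/create" {
  capabilities = ["update"]
}
EOT
}
```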

At this stage, we are able to authenticate and give access to our CI with Vault.

Use our secrets in our CI

Now that it’s possible for our CI to interact with Vault, let’s take a look at running our CI.

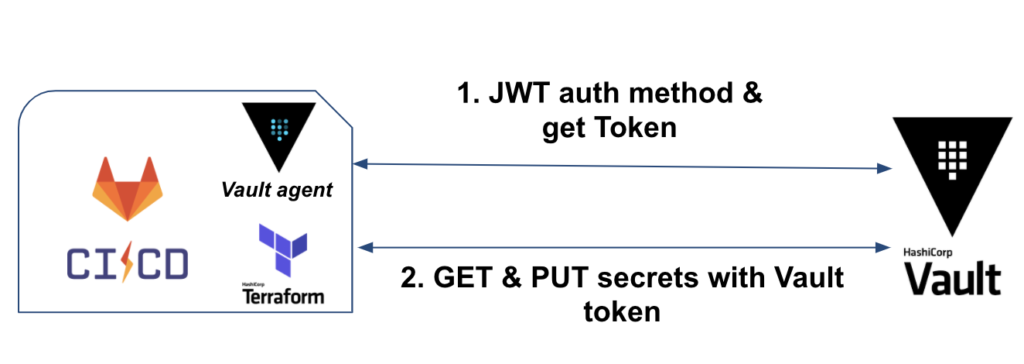

Here is the workflow we will have when running our CI:

First, the Vault binary (or Vault in agent mode) takes care of the authentication and of retrieving the Vault token.

Second, Terraform is used to retrieve the AWS credentials, deploy the IaC and store the database secrets.

CI job authentication with Vault

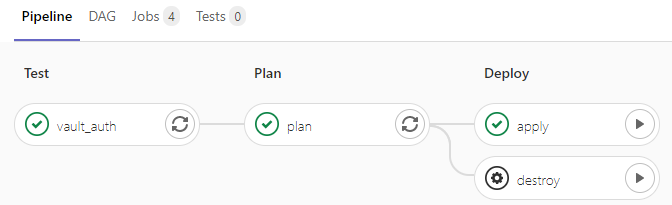

Regarding our CI jobs, we will configure our gitlab-ci.yml, so as to:

- Install Vault and Terraform in a before_script so that the tools are available on all of our jobs. To save time, you can build your own CI image to avoid installing them each time you start a job.

- Have a first test job for JWT authentication via Vault

- Once the authentication is tested and functional, have 2 sequential jobs: terraform plan & terraform apply / destroy

For our test job, we have the following snippet:

    image: bash

    variables:
      TF_VAR_vault_role: web-pipeline
      TF_VAR_vault_backend: web-aws

    vault_auth:
      stage: test
      script:
        - export VAULT_TOKEN="$(vault write -field=token auth/jwt/login role=$TF_VAR_vault_role jwt=$CI_JOB_JWT)"
        - vault token lookup

In our vault_auth job, we execute 2 commands at the script level:

- The first authenticates to Vault via the JWT method:

  - We specify the role we want to use. Here the role is web-pipeline, which we configured previously.

  - We use the environment variable CI_JOB_JWT provided by Gitlab-CI for our job. This variable contains the JWT used to authenticate our CI job with Vault.

  - Finally, we retrieve the Vault token and put it in the environment variable VAULT_TOKEN. Terraform reuses it for the rest of its actions.

- The second, vault token lookup, lets us check the TTL of our Vault token, the attached policies, etc.

Using secrets with Terraform

There are two kinds of secrets that Terraform must handle at the CI level:

- AWS: retrieving the credentials of the AWS session via an assume role in order to deploy the IaC. In our case: an EC2 instance and an RDS database.

- Database: storing our database secret in Vault. In our case: the administrator user of the database.

For AWS secrets, Terraform is able to retrieve its credentials via the Vault provider:

    # var.vault_backend and var.vault_role are set by the TF_VAR_vault_backend
    # and TF_VAR_vault_role variables of the CI job (web-aws and web-pipeline).
    data "vault_aws_access_credentials" "creds" {
      backend = var.vault_backend
      role    = var.vault_role
      type    = "sts"
    }

    provider "aws" {
      region     = var.region
      access_key = data.vault_aws_access_credentials.creds.access_key
      secret_key = data.vault_aws_access_credentials.creds.secret_key
      token      = data.vault_aws_access_credentials.creds.security_token
    }

Regarding database secrets, once the RDS instance is created, we establish the connection between Vault and the database, allowing Vault to perform its actions on it through the database admin user:

    resource "vault_database_secret_backend_connection" "mysql" {
      backend       = local.db_backend
      name          = "mysql"
      allowed_roles = [var.project_name]

      mysql {
        connection_url = "${aws_db_instance.web.username}:${random_password.password.result}@tcp(${aws_db_instance.web.endpoint})/"
      }
    }
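The connection above refers to a random password, an RDS instance and a database secret engine that are not shown in the article. A plausible minimal version is sketched below; the instance size, storage, admin user name and the mount declaration are assumptions, and local.db_backend is taken to be the mount path of the database engine (web-db in the commands of this article).

```hcl
# Admin password generated by Terraform. Special characters are disabled here
# so that the password can be embedded in the connection URL without escaping.
resource "random_password" "password" {
  length  = 32
  special = false
}

# Hypothetical sizing: adjust engine version, class and storage to your needs.
resource "aws_db_instance" "web" {
  identifier          = "${var.project_name}-db"
  engine              = "mysql"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "vaultadmin"
  password            = random_password.password.result
  skip_final_snapshot = true
}

# Database secret engine in which the connection and roles are declared.
resource "vault_mount" "db" {
  path = local.db_backend
  type = "database"
}
```

Note that the generated password ends up in the Terraform state, which is one more reason to let Vault rotate it afterwards.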

If you want to rotate the administrator password so that only Vault knows it, you can run the following command:

    # Vault admin privilege (only for test)
    $ vault write -force web-db/rotate-root/mysql

And finally, so that the application can use its database secrets, we create a Vault role through which Vault creates a read-only database user with a defined lifetime (preferably short):

    # Create a role for a read-only user in the database.
    # The role name must be listed in allowed_roles of the connection above.
    resource "vault_database_secret_backend_role" "role" {
      backend             = local.db_backend
      name                = var.project_name
      db_name             = vault_database_secret_backend_connection.mysql.name
      creation_statements = ["CREATE USER '{{name}}'@'%' IDENTIFIED BY '{{password}}';GRANT SELECT ON *.* TO '{{name}}'@'%';"]
      default_ttl         = var.db_secret_ttl
    }

Once the project has been deployed through the CI, we can test our application secrets in this way. The lifetime of the generated user is set by the default_ttl of the role, so no ttl argument is needed:

    # Vault admin privilege (only for test)
    $ vault read web-db/creds/web

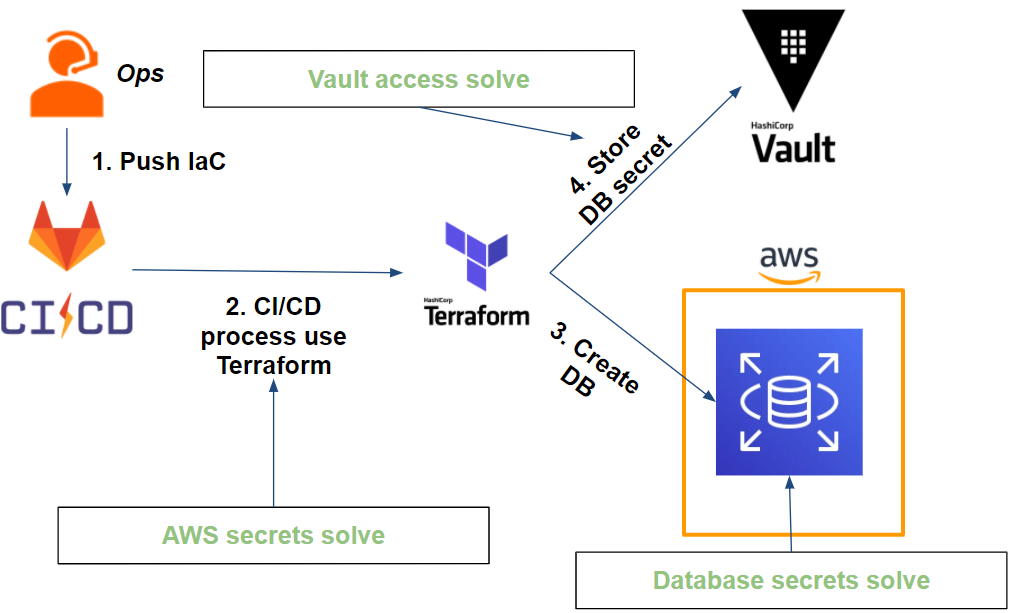

At this stage, we have succeeded in responding to our various issues for our CI. Which gives the following result:

The expiration and rotation of our CI secrets

So far, we have managed to set up a CI through Gitlab, Terraform and Vault that addresses our various issues.

Which gives the following workflow:

If access to our secrets is secure, what about rotation?

- Access to Vault, which relies on the Vault token: the Vault CLI authenticates each time a Gitlab-CI job is launched. In other words, the Vault token is unique per job (disposable token) and has a short TTL (eg: 1min).

- AWS secrets: Terraform retrieves AWS secrets (a session assumed through a Vault role) at each execution. The secrets are unique to each execution and limited in time (eg: 15min, the shortest session AWS STS allows).

- Database secrets are handled on 2 levels:

  - The administrator database user is stored in Vault and its password is changed by Vault. At this stage, only Vault knows the credentials (Vault administrators aside).

  - The application database user is generated at the request of the application and has a limited TTL (eg 1 hour). The secret in question is a read-only user, and therefore has limited rights.
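These lifetimes map onto the jwt_token_max_ttl and db_secret_ttl variables used in the Terraform configuration above. A sketch of their declaration, using the example values from the list (expressed in seconds), could be the following; the defaults are only illustrative, and a job that creates an RDS instance may need a longer token lifetime.

```hcl
# Lifetime, in seconds, of the Vault token delivered to a CI job (eg: 1 min).
variable "jwt_token_max_ttl" {
  type    = number
  default = 60
}

# Lifetime, in seconds, of the read-only database user generated for the
# application (eg: 1 hour).
variable "db_secret_ttl" {
  type    = number
  default = 3600
}
```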

We have seen how to solve our CI level issues, but it does not stop only with the deployment of our application infrastructure.

Indeed, it remains for our application to retrieve its secrets through Vault. How can we respond to this issue as transparently as possible?


2021-03-01 16:30